As with my previous identity management issues with SharePoint (I hate you, FIM!), I ran into another one the other day. The requirement was for SharePoint 2010 to be the place where users manage their profile information, and the most important part was getting profile images to sync to AD so they can be used in Lync and Outlook.

The guys on the ground were pulling their hair out, as they had followed the instructions from two corroborating sites. Unfortunately, even with all of that setup, images were not being successfully added to AD.

Knowing how much fun FIM is, I did a bit of searching prior to arriving on site and found an article that sounded very similar to the issues they were having. Turns out it was the answer, but I’m going to duplicate a bit of it here just in case it disappears. The scenario looks like this:

- You have configured FIM to sync the images as per the TechNet article linked above (“sites”).

- Looking in the IIS logs of the mysite (or whatever name is accurate) web app, you see 401.1 errors with Win32 status 2148074254 and/or 2148074252 for anonymous requests to the thumbnail JPEGs.

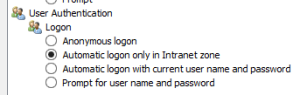

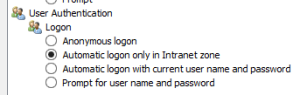
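If you would rather not eyeball the logs, here is a rough Python sketch that scans a W3C-format IIS log for these entries. It assumes the full status values are 2148074254 (SEC_E_NO_CREDENTIALS) and 2148074252 (SEC_E_LOGON_DENIED), and that the log includes the `cs-uri-stem`, `sc-status`, `sc-substatus` and `sc-win32-status` fields. The sample log lines and the `jdoe` thumbnail path are made up for illustration.

```python
# Win32 status values as they appear in the sc-win32-status log field.
SSPI_ERRORS = {
    2148074254: "SEC_E_NO_CREDENTIALS (0x8009030E): no credentials were supplied",
    2148074252: "SEC_E_LOGON_DENIED (0x8009030C): the logon attempt failed",
}


def find_auth_failures(lines):
    """Yield (uri, win32_status, meaning) for 401.1 hits on JPEG requests."""
    fields = []
    for line in lines:
        line = line.strip()
        if line.startswith("#Fields:"):
            # The header names the columns used by the rows that follow.
            fields = line.split()[1:]
            continue
        if not line or line.startswith("#") or not fields:
            continue
        row = dict(zip(fields, line.split()))
        if row.get("sc-status") != "401" or row.get("sc-substatus") != "1":
            continue
        try:
            code = int(row.get("sc-win32-status", ""))
        except ValueError:
            continue
        uri = row.get("cs-uri-stem", "")
        if code in SSPI_ERRORS and uri.lower().endswith((".jpg", ".jpeg")):
            yield uri, code, SSPI_ERRORS[code]


# Hypothetical sample; in practice, pass an open log file instead.
SAMPLE_LOG = """\
#Fields: date time cs-method cs-uri-stem cs-username sc-status sc-substatus sc-win32-status
2012-01-01 10:00:00 GET /User+Photos/Profile+Pictures/jdoe_LThumb.jpg - 401 1 2148074254
2012-01-01 10:00:01 GET /default.aspx - 200 0 0
"""

if __name__ == "__main__":
    for uri, code, meaning in find_auth_failures(SAMPLE_LOG.splitlines()):
        print(uri, code, meaning)
```

Against a real log you would call `find_auth_failures(open(path))` with the path to the mysite web app's log file.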

What you need to do is log on to the box where FIM is running as the FIM sync user account. From there, add your mysite URL to the Local intranet zone in IE. Re-run the sync and it should work.
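I made the change through the IE dialog, but the same mapping can in principle be scripted. The sketch below is an assumption on my part, not something from the original article: it writes the per-user `ZoneMap` registry entry that IE uses for site-to-zone assignments (zone `1` is Local intranet). The names `contoso.local` and `mysite` are placeholders, the script has to run as the FIM sync account because the setting lives under `HKEY_CURRENT_USER`, and on servers with IE Enhanced Security Configuration enabled the entries live under `EscDomains` instead of `Domains`.

```python
import sys

ZONE_INTRANET = 1  # IE security zone number for "Local intranet"
ZONE_MAP = r"Software\Microsoft\Windows\CurrentVersion\Internet Settings\ZoneMap"


def zone_map_key(domain, subdomain=None, esc=False):
    """Build the registry path (under HKCU) for a site-to-zone mapping."""
    branch = "EscDomains" if esc else "Domains"
    parts = [ZONE_MAP, branch, domain]
    if subdomain:
        parts.append(subdomain)
    return "\\".join(parts)


def add_to_intranet_zone(domain, subdomain=None, scheme="http", esc=False):
    """Map scheme://subdomain.domain to the intranet zone for the current user."""
    if sys.platform != "win32":
        raise RuntimeError("zone mappings only exist on Windows")
    import winreg  # Windows-only standard library module

    path = zone_map_key(domain, subdomain, esc)
    # CreateKeyEx opens the key, creating it (and any parents) if needed.
    with winreg.CreateKeyEx(winreg.HKEY_CURRENT_USER, path) as key:
        winreg.SetValueEx(key, scheme, 0, winreg.REG_DWORD, ZONE_INTRANET)
    return path


if __name__ == "__main__":
    # Placeholder host: http://mysite.contoso.local
    print(zone_map_key("contoso.local", "mysite"))
```

If a GPO manages the site-to-zone list, a per-user entry like this may be ignored, so check the zone shown in IE afterwards.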

The IIS log error appears because FIM does not pass credentials when it is challenged. With the mysite in the intranet zone, credentials are sent automatically instead of waiting for a prompt (unless a GPO has overridden this setting).
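To see the challenge for yourself, you can request one of the thumbnail URLs with no credentials at all and look at what comes back. This is only a sketch using the Python standard library; the URL in the usage line is a placeholder, and the exact `WWW-Authenticate` values depend on how the web app's authentication is configured.

```python
import urllib.error
import urllib.request


def probe_anonymous(url, timeout=10):
    """Request url without credentials; return (status, WWW-Authenticate values)."""
    # An empty ProxyHandler skips any system proxy so the request goes direct.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status, resp.headers.get_all("WWW-Authenticate") or []
    except urllib.error.HTTPError as err:
        # A 401 lands here; its headers list the schemes the server accepts.
        return err.code, err.headers.get_all("WWW-Authenticate") or []


if __name__ == "__main__":
    # Placeholder URL; substitute a real thumbnail path from your mysite.
    print(probe_anonymous("http://mysite/User%20Photos/Profile%20Pictures/jdoe_LThumb.jpg"))
```

A 401 with a challenge header such as `NTLM` or `Negotiate` means the server is asking for Windows credentials, which matches the anonymous 401.1 entries in the log.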