Well, it’s good to see that User Profile Sync in SharePoint 2010 is better than it was in 2007. However, there are definitely still some issues.

The first one, which we only just noticed, is that the User Profile Sync jobs were constantly failing. Unfortunately, there isn’t really a good way to tell without opening the MIISClient program and looking at the errors. Basically, if you suspect profile sync is not working for whatever reason, open MIISClient.exe (`Program Files\Microsoft Office Servers\14.0\Synchronization Service\UIShell`) as the farm account and check that every run shows a status of success.

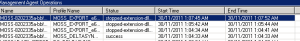

For us, all the MOSS-{guid} jobs were failing with the error `stopped-extension-dll-exception`.

Given that lovely error message, I’m still amazed I was able to isolate this issue (the event logs reported that Central Administration was being accessed via a non-registered name). It turns out the cause is the alternate access mappings (AAMs) for the Central Administration (CA) website. By default, SharePoint sets the AAM for CA to the name of the machine you first installed SharePoint on. However, we had changed that AAM to a friendlier name.

When you update the “Public URL for Zone” for the CA website, the change is not propagated to the management agent configuration in MIISClient. As a result, MIISClient cannot correctly reach the CA APIs it uses for user profile sync (or at least, that is my best guess at what is happening).

Fix it with the following steps:

- Open MIISClient.exe as the farm account.

- Go to Tools > Management Agents (or click Management Agents in the toolbar)

- Right-click on the MOSS-{guid} management agent and select Properties

- Go to the Configure Connection Information section in the left-hand pane

- In the connection information box, change the Connect To URL to match the “Public URL for Zone” listed for your CA in the AAM configuration.

- Re-enter the farm account username and password for good measure

- Save the configuration

- Run a full profile sync from CA
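If you want a quick sanity check that the two URLs really match before and after the change, the comparison is easy to script. The following is a minimal Python sketch, not part of SharePoint or MIISClient: `normalize` and `urls_match` are hypothetical helpers, you paste in the two URLs by hand (the “Public URL for Zone” from the AAM page in CA, and the Connect To URL from the management agent’s connection information), and it assumes that letter case in the scheme or host, an omitted default port, and a trailing slash are not meaningful differences. A match here only rules out this particular misconfiguration; it does not prove the sync will succeed.

```python
from urllib.parse import urlsplit

# Default ports per scheme, so "http://ca:80/" and "http://ca/" compare equal.
DEFAULT_PORTS = {"http": 80, "https": 443}


def normalize(url: str) -> tuple:
    """Reduce a URL to (scheme, host, port, path) for comparison.

    Scheme and host are lower-cased, an omitted port is replaced by the
    scheme's default port, and a trailing slash on the path is ignored.
    """
    parts = urlsplit(url.strip())
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    port = parts.port or DEFAULT_PORTS.get(scheme)
    path = parts.path.rstrip("/")
    return (scheme, host, port, path)


def urls_match(aam_public_url: str, ma_connect_to_url: str) -> bool:
    """Return True if the management agent's Connect To URL points at the
    same location as the CA "Public URL for Zone" (hypothetical helper)."""
    return normalize(aam_public_url) == normalize(ma_connect_to_url)


if __name__ == "__main__":
    # Hypothetical values: CA was first installed on SPAPP01, then its AAM
    # was renamed to spadmin.contoso.local without updating the agent.
    print(urls_match("http://spadmin.contoso.local:2010/", "http://SPAPP01:2010/"))  # False
    print(urls_match("http://spadmin.contoso.local:2010/", "http://SPADMIN.contoso.local:2010"))  # True
```

The first call shows the situation described above (the agent still points at the original machine name); the second shows the state you want after editing the Connect To URL.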