With the recent-ish announcement that Bitwarden can store SSH keys, I've been experimenting with getting it to work in my WSL2 Ubuntu environment. While I normally use a Windows machine, I do a lot of my dev & ops work in WSL2.

As 1Password has had this functionality for a while, many of the references I found are for 1Password (or even for the built-in OpenSSH functionality in Windows).

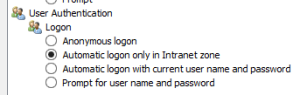

Configure and enable the SSH agent on Windows as described in the Bitwarden instructions. Once that is complete, you will be able to list the keys stored in Bitwarden from PowerShell with:

    ssh-add -L

In PowerShell, install npiperelay. I used Chocolatey, but any installation method works; just note where the `.exe` ends up so you can update the path in the script later.

    choco install npiperelay

In WSL2, install socat:

    sudo apt install socat

In WSL2, create a script that will rebind the ssh-agent. I save this as `~/scripts/agent-bridge.sh`:

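    # Point SSH clients in WSL at a Unix socket under ~/.ssh
    export SSH_AUTH_SOCK="$HOME/.ssh/agent.sock"

    # Only start a new bridge if nothing is listening on the socket yet
    if ! ss -a | grep -qF "$SSH_AUTH_SOCK"; then
        # Remove a stale socket file left over from a previous session
        rm -f "$SSH_AUTH_SOCK"
        # Relay the socket to the Windows agent's named pipe through npiperelay, detached from this shell
        ( setsid socat UNIX-LISTEN:"$SSH_AUTH_SOCK",fork EXEC:"/mnt/c/ProgramData/chocolatey/lib/npiperelay/tools/npiperelay.exe -ei -s //./pipe/openssh-ssh-agent",nofork & ) >/dev/null 2>&1
    fi

Make the script executable: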

    chmod +x ~/scripts/agent-bridge.sh

Edit your `~/.bashrc` and add the following line at the end:

    source ~/scripts/agent-bridge.sh

Restart your shell, and you should then be able to list your current keys with `ssh-add -l`!
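If `ssh-add -l` does not list your keys, it helps to know which link in the chain is broken. Below is a minimal sketch of a check you could paste into your shell; the function name `check_agent_bridge` is my own (hypothetical, not something from Bitwarden or npiperelay), and it assumes the socket path exported by `agent-bridge.sh`.

```bash
# Hypothetical helper, not part of the original setup: checks the bridge
# created by ~/scripts/agent-bridge.sh after the shell has been restarted.
check_agent_bridge() {
    # agent-bridge.sh exports SSH_AUTH_SOCK; if it is empty, the script
    # was probably not sourced from ~/.bashrc.
    if [ -z "${SSH_AUTH_SOCK:-}" ]; then
        echo "SSH_AUTH_SOCK is not set (is agent-bridge.sh sourced?)" >&2
        return 1
    fi

    # socat creates a Unix domain socket at that path once it is listening.
    if [ ! -S "$SSH_AUTH_SOCK" ]; then
        echo "$SSH_AUTH_SOCK is not a socket (is the socat bridge running?)" >&2
        return 1
    fi

    # Ask the agent for its keys. ssh-add -l exits 0 when keys are listed,
    # 1 when the agent has no identities, and 2 when it cannot be reached.
    ssh-add -l
}
```

Run `check_agent_bridge` after restarting your shell: an unset variable points at `~/.bashrc`, a missing socket points at the socat/npiperelay bridge, and an error from `ssh-add` itself suggests revisiting the Windows side (the Bitwarden agent setup from the first step).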
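If you didn't install npiperelay with Chocolatey, or would rather not edit the hard-coded path, the bridge can be wrapped in a function with an overridable path. This is a minimal sketch, not the script I actually use: `start_agent_bridge` and the `NPIPERELAY` variable are hypothetical names, and the default path is the Chocolatey location from the script above. It also checks that `npiperelay.exe` exists before touching the old socket.

```bash
# Hypothetical variant of ~/scripts/agent-bridge.sh: the same socat/npiperelay
# bridge, wrapped in a function so the relay path can be overridden.
start_agent_bridge() {
    # Assumption: NPIPERELAY may be set beforehand; otherwise fall back to
    # the Chocolatey install path used in the original script.
    local relay="${NPIPERELAY:-/mnt/c/ProgramData/chocolatey/lib/npiperelay/tools/npiperelay.exe}"

    export SSH_AUTH_SOCK="$HOME/.ssh/agent.sock"

    # If something is already listening on the socket, reuse it.
    if ss -a 2>/dev/null | grep -qF "$SSH_AUTH_SOCK"; then
        return 0
    fi

    # Fail early, without removing the socket, if the relay is missing.
    if [ ! -f "$relay" ]; then
        echo "agent-bridge: npiperelay not found at $relay" >&2
        return 1
    fi

    # Remove a stale socket file and make sure its directory exists.
    rm -f "$SSH_AUTH_SOCK"
    mkdir -p "$(dirname "$SSH_AUTH_SOCK")"

    # Same socat invocation as the original, detached from the shell.
    ( setsid socat "UNIX-LISTEN:$SSH_AUTH_SOCK,fork" \
        EXEC:"$relay -ei -s //./pipe/openssh-ssh-agent",nofork & ) >/dev/null 2>&1
}
```

To use it, put the function in `~/scripts/agent-bridge.sh` followed by a call to `start_agent_bridge`, and set `NPIPERELAY` before sourcing if your copy lives elsewhere. Since socat splits the `EXEC` command line on spaces, this assumes the path to `npiperelay.exe` contains none.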