Boo, just got the email today that CrashPlan is leaving the home market. After I don’t know how many years, it looks like I’ll have to find another provider. It looks like there are a few, but with no computer-to-computer options baked in all will be a step back. *sigh*

**Update 8/23/2017**

I’ve been following a lot of different threads on this. Sadly, there are no direct competitors. Turns out CrashPlan (even with the crappy Java app) was the best for a lot of reasons including the following:

- Unlimited – I am not a super heavy user with ~1TB of total storage spanning back for the last 10 years of use/versions, but it’s always nice to know it’s there.

- Unlimited versions – This is key and has saved my bacon a few times after a migration (computer/drive/other backup to NAS), when you think you have everything but find out a year later, when you go looking for a file, that you don’t.

- Family plan (i.e. more than one computer) – nice as I have 3 machines, plus my NAS that I can

- Peer-to-peer – one backup solution to rule them all that works on remote networks. Unfortunately, it uses gross ports so it doesn’t work everywhere (like in corporate places), and you can’t shove peer-to-peer backups to the cloud; those peers have to upload their data directly.

- Ability to not back up on specific networks…like when I’m tethered to my phone.

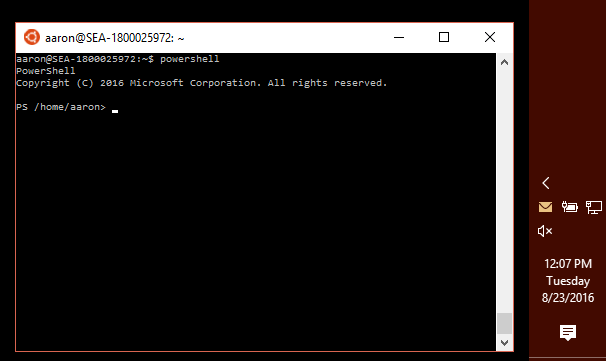

Total sidebar, but speaking of crappy Java apps, I had just migrated to using a docker image of CrashPlan too due to the continued pain of updating it with Patter’s awesome SPK. Yay to running everything in docker now instead of native Synology apps.

My current setup consists of 3 Windows machines and a Synology NAS. I had the CrashPlan family account so each of those machines would sync to the cloud, and all the Windows machines would sync to the NAS. Nothing crazy, and yes, I know I was missing a 3rd location for NAS storage for those following the 3-2-1 method.

The other cloud options I’ve looked at so far:

- Carbonite – no Linux client, so non-starter as that’s where I’d like to centralize my data. I used to use them before CrashPlan and wasn’t a fan. I know things change in 10 years, but…

- Backblaze – I want to like Backblaze, but no Linux client and limited versions (that they say they are working on – see comments section) keep me away. They do have B2 integrations via 3rd party backup/sync partners. After doing some digging, they all look hard. I have set up a CloudBerry docker image to play with later and see how good it could be. Using B2 storage, it would be a similar price to CrashPlan as I don’t have tons of data.

- iDrive – Linux client (!) and multiple hosts, but only allows 32 versions, and dedupe seems to be missing so I’m not sure what that would mean for my ~1TB of data. They have a 2TB plan for super cheap right now ($7 for the first year), which could fill all my needs.

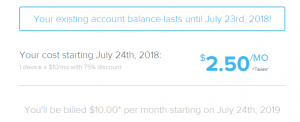

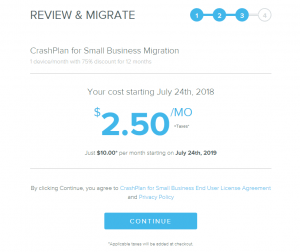

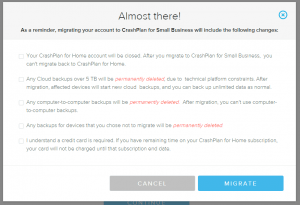

- CrashPlan Small Business – Same as home, but a single computer and no peer-to-peer.
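For anyone wanting to sanity-check the "similar price" claim for B2 against their own data, here is a rough back-of-the-envelope sketch. The per-GB rate is an assumption (B2's advertised storage price around the time of writing, as far as I know), the function name is made up for illustration, and it ignores download and transaction fees, so check current pricing before relying on it.

```python
def monthly_storage_cost(stored_gb: float, price_per_gb_month: float) -> float:
    """Storage-only cost per month; ignores download and API transaction fees."""
    return stored_gb * price_per_gb_month


# ASSUMPTION: $0.005 per GB per month for storage. Verify against current pricing.
ASSUMED_PRICE_PER_GB_MONTH = 0.005

# ~1TB, the rough total mentioned in this post (using 1 TB = 1000 GB).
stored_gb = 1000

monthly = monthly_storage_cost(stored_gb, ASSUMED_PRICE_PER_GB_MONTH)
yearly = monthly * 12

print(f"Estimated storage cost: ${monthly:.2f}/month, ${yearly:.2f}/year")
```

Keep in mind that with unlimited versions, the stored size can grow well beyond the size of the live data, so the real number depends on how much history you keep.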

So where does that leave me? I’m hopefully optimistic about companies getting more feature parity, and thankfully my subscription doesn’t expire until July of 2018. Therefore, while I’m doing some work, I’m firmly in the “wait and see” camp at this point. However, if I were to move right now, this is what my setup would look like:

- Install Synology Cloud Station Backup and configure the 3 Windows systems to back up to the Synology NAS. Similar to CrashPlan, I can UPnP a port through the firewall for external connectivity (I can even use 443 if I really want/need to). This is my peer-to-peer backup and is basically like-for-like with CrashPlan peer-to-peer. This stores up to 32 versions of files, which while not ideal, is ok considering…

- Upgrade to CrashPlan Small Business on the NAS. While I’m not thrilled about the way this was handled, I understand it (especially seeing the “OMG I HAVE 30TB IN PERSONAL CRASHPLAN” redditor posts) and that means I don’t have to reupload anything. Send both the Cloud Station Backups and other NAS data to CrashPlan. This gets me the unlimited versions, plus I have 3-2-1 protections for my laptops/desktops.

- Use Synology Cloud Sync (not a backup) or CloudBerry to B2 for anything I deem needs that extra offsite location for the NAS. This would be an improvement to my current setup, and I could be more selective about what goes there to keep costs way down.
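To make the 3-2-1 reasoning behind this plan concrete, here is a small sketch that models each copy of the data and checks the rule (at least 3 copies, on at least 2 kinds of media, with at least 1 offsite). The `Copy` class, the media labels, and the function name are all made up for illustration; this is just one way of counting, not an official definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    name: str
    medium: str    # illustrative label, e.g. "local-disk", "nas", "cloud"
    offsite: bool


def satisfies_3_2_1(copies) -> bool:
    """True if there are >= 3 copies, on >= 2 media, with >= 1 offsite."""
    media = {c.medium for c in copies}
    offsite_count = sum(1 for c in copies if c.offsite)
    return len(copies) >= 3 and len(media) >= 2 and offsite_count >= 1


# Files that live on a Windows laptop/desktop under the plan above.
laptop_files = [
    Copy("Windows machine (original)", "local-disk", False),
    Copy("Synology NAS via Cloud Station Backup", "nas", False),
    Copy("CrashPlan Small Business (uploaded from the NAS)", "cloud", True),
]

# Files that only live on the NAS, without the extra B2 destination.
nas_files = [
    Copy("Synology NAS (original)", "nas", False),
    Copy("CrashPlan Small Business", "cloud", True),
]

# Same NAS files, with the optional B2 copy added.
nas_files_with_b2 = nas_files + [
    Copy("B2 via Cloud Sync or CloudBerry", "cloud", True),
]

for label, copies in [
    ("laptop files", laptop_files),
    ("NAS-only files", nas_files),
    ("NAS-only files + B2", nas_files_with_b2),
]:
    print(f"{label}: 3-2-1 satisfied = {satisfies_3_2_1(copies)}")
```

This lines up with the gap mentioned earlier: the laptops and desktops are covered, while NAS-only data only gets there once a third copy (such as B2) is added.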

Hopefully this helps others, and I’ll keep updating this post based on what I see/move towards. Feel free to add your ideas into the comments too.

Just saw this announcement from MSFT. Could be an interesting archival strategy if tools start to utilize it – https://azure.microsoft.com/en-us/blog/announcing-the-public-preview-of-azure-archive-blob-storage-and-blob-level-tiering/

**Update 10/11/2017**

A quick update on some things that have changed. I’ve moved away from Comcast, and now have Fiber! That means, no more caps (and 1Gbps speeds), so I’m more confident to go with my ideas above. So far this is what I’ve done:

- Set up Synology Cloud Station Backup. To ensure I get the best coverage everywhere, I’ve created a new domain name and have mapped 443 externally to the internal Synology software’s port. When setting it up in the client, you need to specify <domain>:443, otherwise it attempts to use the default port (it even works with 2FA). CPU utilization isn’t great on the client software, but that’s primarily because the filtering criteria aren’t great (if you just add your Windows user folder, all the temp internet files and caches constantly get uploaded). It would be nice if you could filter file paths too, similar to how CrashPlan does it – https://support.code42.com/CrashPlan/4/Troubleshooting/What_is_not_backing_up (duplicating below in case that ever goes away). I’ll probably file a ticket about that and increasing the version limit…just because.

- I still have CrashPlan Home installed on most of my computers at this point as I migrate, but now that I know Synology backup works, I’ll start decommissioning it (yay to lots of Java-stolen memory back!).

- I’ve played around with a CloudBerry docker image, but I’m not impressed. I still want to find something else for my NAS stuff to maintain 3 copies (it would be <50GB of stuff). Any ideas?

CrashPlan’s Windows Exclusions – based on Java Regex

.*/(?:42|\d{8,}).*/(?:cp|~).*

(?i).*/CrashPlan.*/(?:cache|log|conf|manifest|upgrade)/.*

.*\.part

.*/iPhoto Library/iPod Photo Cache/.*

.*\.cprestoretmp.*

.*/Music/Subscription/.*

(?i).*/Google/Chrome/.*cache.*

(?i).*/Mozilla/Firefox/.*cache.*

.*/Google/Chrome/Safe Browsing.*

.*/(cookies|permissions).sqllite(-.{3})?

.*\$RECYCLE\.BIN/.*

.*/System Volume Information/.*

.*/RECYCLER/.*

.*/I386.*

.*/pagefile.sys

.*/MSOCache.*

.*UsrClass\.dat\.LOG

.*UsrClass\.dat

.*/Temporary Internet Files/.*

(?i).*/ntuser.dat.*

.*/Local Settings/Temp.*

.*/AppData/Local/Temp.*

.*/AppData/Temp.*

.*/Windows/Temp.*

(?i).*/Microsoft.*/Windows/.*\.log

.*/Microsoft.*/Windows/Cookies.*

.*/Microsoft.*/RecoveryStore.*

(?i).:/Config\\.Msi.*

(?i).*\\.rbf

.*/Windows/Installer.*

.*/Application Data/Application Data.*

(?i).:/Config\.Msi.*

(?i).*\.rbf

(?i).*/Microsoft.*/Windows/.*\.edb

(?i).*/Google/Chrome/User Data/Default/Cookies(-journal)?

(?i).*/Safari/Library/Caches/.*

.*\.tmp

.*\.tmp/.*
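If you want to see which of your own files these patterns would skip, here is a minimal sketch in Python using a handful of the patterns above, copied verbatim. Two assumptions on my part: the patterns are matched against the whole path (the way Java's `matches()` behaves), and Windows backslashes are converted to forward slashes first, since every pattern uses `/`. The helper names are made up, and Python's `re` is not identical to Java regex, although these particular patterns use only syntax both understand.

```python
import re

# A subset of the exclusion patterns listed above, copied as-is.
EXCLUSION_PATTERNS = [
    r".*\.part",
    r"(?i).*/Google/Chrome/.*cache.*",
    r".*\$RECYCLE\.BIN/.*",
    r".*/pagefile.sys",
    r".*/AppData/Local/Temp.*",
    r"(?i).*/ntuser.dat.*",
    r".*\.tmp",
]

COMPILED = [re.compile(pattern) for pattern in EXCLUSION_PATTERNS]


def normalize(path: str) -> str:
    """ASSUMPTION: paths are compared with forward slashes, as in the patterns."""
    return path.replace("\\", "/")


def is_excluded(path: str) -> bool:
    """True if the whole normalized path matches any exclusion pattern."""
    candidate = normalize(path)
    return any(rx.fullmatch(candidate) for rx in COMPILED)


if __name__ == "__main__":
    samples = [
        r"C:\Users\me\AppData\Local\Temp\setup.log",
        r"C:\Users\me\Documents\report.docx",
        r"C:\Users\me\Downloads\movie.mkv.part",
        r"C:\Users\me\NTUSER.DAT",
    ]
    for sample in samples:
        print(f"{sample}: excluded = {is_excluded(sample)}")
```

Something like this could also be used to pre-filter a folder list before pointing a backup client at it, given that the Synology client can't filter on paths.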
